Neural Network Trained by Genetic Algorithms for Enemy Behavior in Video Games

In this research project, carried out in pairs, we wanted to study Neural Networks: how they work and which applications they could have in Video Game development.

During our research we discovered Neural Networks trained by Genetic Algorithms, which can progressively learn to solve a given task when they receive rewards and punishments in the form of a score.
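The score-driven learning described above can be sketched roughly as follows. This is only a minimal illustration, not the project's implementation: the population size, the elitism, the uniform crossover, the Gaussian mutation and the `toy_fitness` function are all assumptions made for the example. In a game, the genome would hold the network weights and the fitness would be the score earned by playing.

```python
import random


def evolve(fitness, genome_size, population_size=30, generations=40,
           elite=5, mutation_rate=0.1, mutation_scale=0.5, seed=0):
    """Evolve flat weight vectors; `fitness` maps a genome to a score (higher is better)."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(genome_size)]
                  for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        # Rank every candidate by its score (the "reward and punishment").
        ranked = sorted(population, key=fitness, reverse=True)
        best_per_generation.append(fitness(ranked[0]))
        # Keep the best candidates unchanged (elitism, an assumption here).
        parents = ranked[:elite]
        children = []
        while len(children) < population_size - elite:
            mother, father = rng.sample(parents, 2)
            # Uniform crossover: each weight comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(mother, father)]
            # Mutation: occasionally nudge a weight with Gaussian noise.
            child = [w + rng.gauss(0, mutation_scale)
                     if rng.random() < mutation_rate else w
                     for w in child]
            children.append(child)
        population = parents + children
    return max(population, key=fitness), best_per_generation


def toy_fitness(genome):
    """Hypothetical stand-in for a game score: best when every weight is 0.5."""
    return -sum((w - 0.5) ** 2 for w in genome)


best, history = evolve(toy_fitness, genome_size=4)
```

Because the best candidates are carried over unchanged, the best score in `history` never decreases from one generation to the next, as long as the fitness function is deterministic.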

We wanted to test whether it was possible to create Enemy AI behaviors for video games using exclusively this approach instead of classical AI structures such as state machines or behavior trees, and finally to compare both approaches to see which one is better suited for Video Game development.

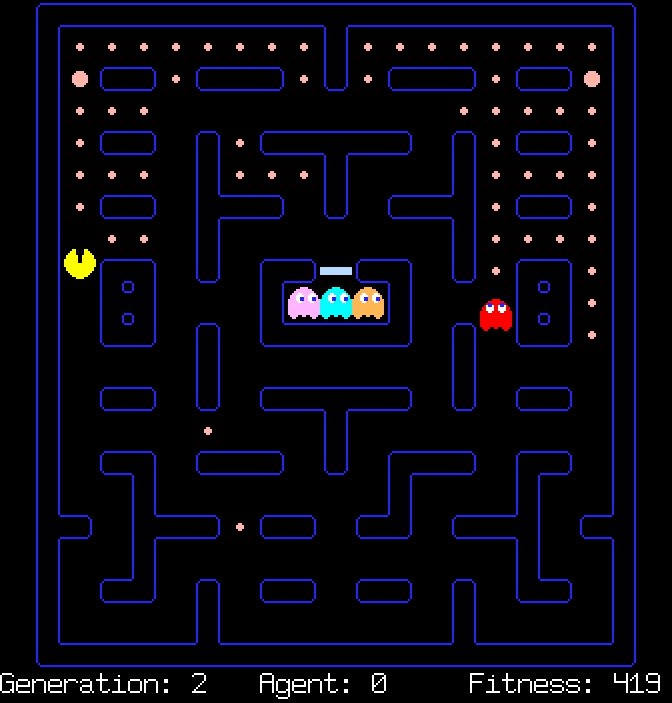

For this research we implemented a Pong AI using this method, and later expanded the investigation to a larger set of data and choices by creating a version of Pac-Man whose Ghosts were trained by a Neural Network (spoiler from the paper: they also needed a Pac-Man player trained by a Neural Network in order to be trained in the first place).

Throughout this project I encountered and solved a number of interesting problems, all of which are documented in the research paper below.

Summary of the Project and Conclusions

The goal of the project was to test whether Neural Networks would be a reliable and useful alternative to more traditional Game AI Architectures such as State Machines or Behavior Trees.

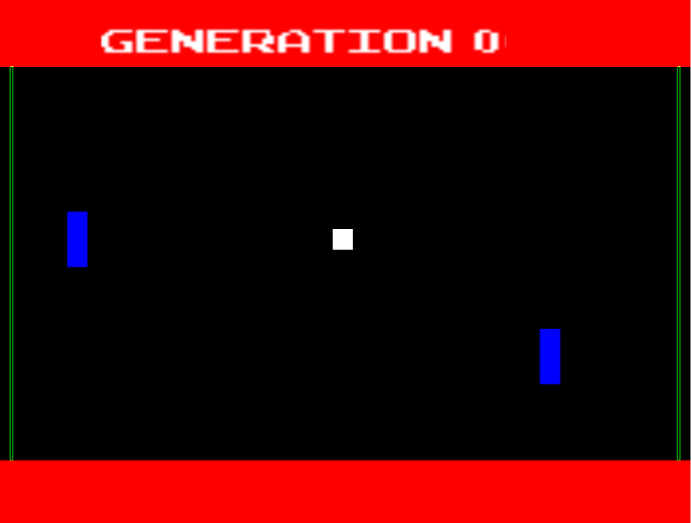

To do so, we implemented a Game AI for Pong and Pac-Man exclusively using Neural Networks trained by Genetic Algorithms.

For Pong this was relatively simple, given that the decision range boils down to moving up or down, and the only variable for decision making is the relative position between the ball and the paddle.
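As a rough illustration of how small such a controller can be, here is a sketch of a network with a single input (the ball's position relative to the paddle) and a single output whose sign picks the move. The layer sizes, the `tanh` activation, the weight layout and the convention that y grows upward are assumptions for the example, not details taken from the project.

```python
import math


def pong_decision(weights, ball_y, paddle_y):
    """Return "up" or "down" from a 1-input, 2-hidden-neuron, 1-output network.

    `weights` is a flat sequence of 7 numbers (hypothetical layout):
    two (weight, bias) pairs for the hidden neurons, then two output
    weights and one output bias.
    """
    w_h1, b_h1, w_h2, b_h2, w_o1, w_o2, b_o = weights
    # Single input: where the ball is relative to the paddle.
    relative_y = ball_y - paddle_y
    hidden_1 = math.tanh(w_h1 * relative_y + b_h1)
    hidden_2 = math.tanh(w_h2 * relative_y + b_h2)
    output = w_o1 * hidden_1 + w_o2 * hidden_2 + b_o
    # Assumes y grows upward, so a positive output means "move up".
    return "up" if output > 0 else "down"


# Hand-picked weights that simply follow the ball; a genetic algorithm
# would have to discover values like these on its own.
follow_ball = (1.0, 0.0, 1.0, 0.0, 1.0, 1.0, 0.0)
move = pong_decision(follow_ball, ball_y=5.0, paddle_y=2.0)
```

With a flat weight vector like this, each candidate in the genetic algorithm's population is just a list of seven numbers.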

On the other hand, Pac-Man presented some pretty interesting problems, given that the game requires navigating a 2D grid while taking into account other variables such as the positions of Pac-Man and the Ghosts, walls, pellets…

This was a very interesting engineering problem, as we had to synthesize as much data as possible into the smallest amount of memory, so that the network would not become convoluted and the algorithm would work properly.

For example, we ended up deciding that the best way to present the input data needed for Pac-Man’s movement was simply four “path score” integers, representing how beneficial taking a path is.

The way this works is by linearly traversing the adjacent grid positions in each of the four cardinal directions and computing a sum of values depending on what is located in each grid cell. Beneficial elements have positive values (+10 if there is a pellet), hazards have negative values (-50 if there is a ghost), etc.

For the output we discarded a cardinal system (up, down, left, right) and decided to take an approach using Pac-Man’s relative rotation (turn left, turn right). This means that Pac-Man now needs two frames in order to turn 180° but it improved its learning rate immensely.
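The relative output scheme can be illustrated with a small sketch. The clockwise heading list, the action names and the rule that any other action keeps the current heading are assumptions for the example; only the two turns themselves come from the description above.

```python
# Headings listed clockwise, so turning right moves one step forward
# in the list and turning left moves one step back.
HEADINGS = ["up", "right", "down", "left"]


def apply_turn(heading, action):
    """Return the new heading after a relative "left" or "right" turn."""
    index = HEADINGS.index(heading)
    if action == "left":
        index -= 1
    elif action == "right":
        index += 1
    # Any other action keeps the current heading (an assumption).
    return HEADINGS[index % len(HEADINGS)]


# Reversing direction takes two consecutive turns, one per frame.
after_first_frame = apply_turn("up", "right")
after_second_frame = apply_turn(after_first_frame, "right")
```

Starting from `"up"`, the two right turns above pass through `"right"` and end at `"down"`, which is the two-frame 180° turn mentioned in the text.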

The conclusions we arrived at with this research were:

Neural Networks can be used for Game AI, but their use for general cases is not encouraged. The fact that we have very little control over how the network is going to behave, other than setting up the network structure and the fitness function, makes it very hard to debug or to direct towards specific behaviors. For the general case, it is better to stick to Behavior Trees or State Machines.

An appropriate use of Neural Networks for Game AI could be whenever there is a very complex underlying system that makes it difficult to code a behavior procedurally. For example: creating an AI Player for a physics-based movement game such as Getting Over It.

Agents using a Neural Network have an easier time learning whenever the input data has previously been strongly synthesized by the programmer (e.g. it is better to use the relative distances between the relevant elements than their individual positions).